The tale of a Supply Chain near-miss incident

TL;DR: We disclosed to Chainguard in December 2023 that one of their GitHub Actions workflow was...

Generative AI solutions and tools are being developed at a breakneck pace. Builders everywhere are doing whatever it takes to ship their products. Security left merely an afterthought. There are a myriad of concerns on the surface of these solutions including bias, privacy of information, and harmful output (i.e. hate speech). Looking deeper, issues can manifest within these solutions that are imperceptible. Imagine querying one of these solutions and receiving reasonable output in return. Unless you are a subject matter expert, the validity of the contents are likely to go over your head. You’re prone to instinctively trust the output. In some domains, this is harmless. Using code emitting from one of these solutions that is broken requires you to spend some time sharpening its rough edges. In biotechnology, a misdiagnosis from a generative AI solution might prescribe a medication or a certain procedure that could kill the patient. Generative AI is being built on a shaky foundation that could collapse at any moment. Is anyone paying attention?

Caught up in the hype of the new revolution brought on by Generative AI solutions, it is easy to convince yourself that the foundations these solutions are built on are novel and therefore you don’t comprehend them yet. The magician has no new tricks. Fundamentally, there are little to no differences between the applications you’ve been using on the web for years (e.g. YouTube, MySpace, etc.) and these new content machines. You’re still feeding inputs and getting outputs that you do something with. Now, it’s the machine within that gets to decide what the output is instead of a human. The revealing insight here is that traditional supply chain protections apply. Models are code. They receive input so that they can be trained. Once trained, they’re deployed so that consumers can use them. All of the threats that defenders have been paying attention to can be utilized within this new domain, along with some new and novel threats.

Security thought leaders and practitioners are racing to provide guidance for protecting this new technology by distributing lists of the top 10 concerns developers and defenders of gen AI solutions should be considering. Strings of words never placed together are forming such as “Prompt Injection” and “Hallucinating Agents” as phrases that you should be wary of. While practitioners continue to iterate on solutions that protect against those attacks, it’s equally valuable to look further into the supply chain of these solutions. Starting from the beginning of the chain with the data that will be used to train the model, the supply chain for gen AI solutions unsurprisingly concludes with the model being deployed to production. Let’s have a closer look at the beginning of the chain and key threat vectors you should be keeping your eye on.

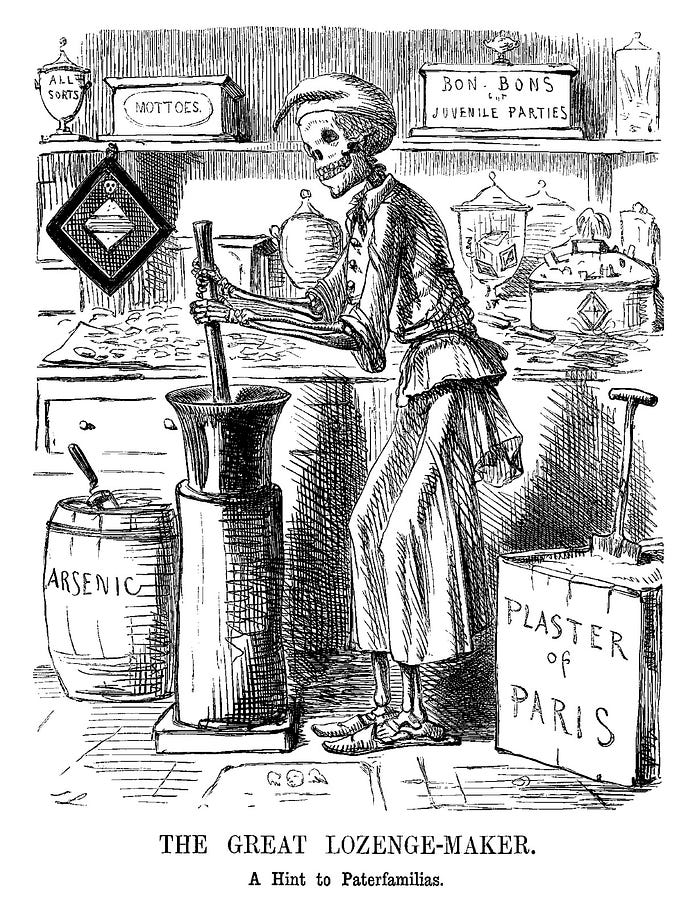

The phrase “data is the new oil” has never been more true than it is today. All AI applications are enabled because of data, the more of it, the more accurate results they can potentially generate. The enormous quantity of data that is now being harvested and utilized does not come without its own set of consequences. If you have been working in the data space, you are likely to be overly familiar with the fallacy that data is rarely ever in a working state until it has been cleaned and organized. The effectiveness of a model is, amongst other things, dependent on the quality, not just the quantity of data. This being said, cleanliness is certainly not an assumption many data practitioners are making when they begin to work with a dataset. An equally important yet less widely-discussed component within this process is checking the validity of the data. Of course the practitioner sifting through the data is implicitly checking for correctness, that’s not what I’m referring to here. The real threats lie in the real-world practice of managing data. Storing the data in unencrypted datastores, leaving those datastores exposed to the internet, not using encryption during transit, not obfuscating data, enabling those with access to the data to observe information that is likely to be private. The training data can be correct to a practitioner when it leaves their machine and is committed into a datastore and then corrupted by an adversary, maliciously manipulating the training data. This process is known as “Data Poisoning” and can have serious effects on generative AI applications, particularly in building trust with the users of these applications. Let’s dive deeper into this topic by examining a case of poisoned frogs, a hidden backdoor within a classifier, and vandalizing Wikipedia in an attempt to poison classifiers that use it as their corpus of training data.

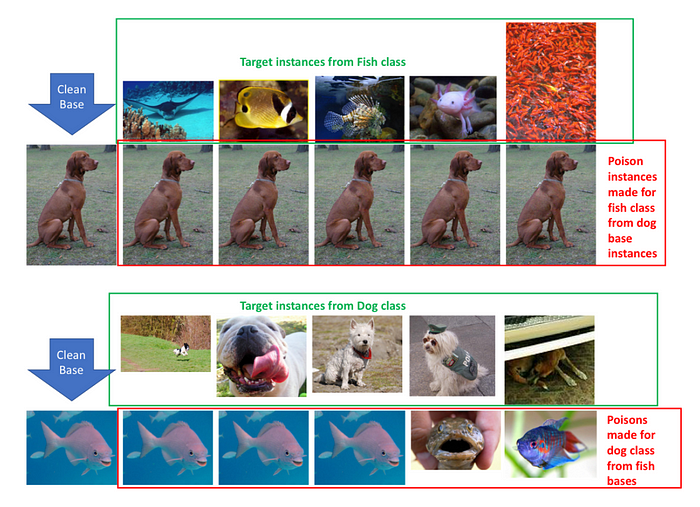

Imagine you are learning about a topic with AI and the teacher (AI) uses images to support text or to explain a concept. It is likely that you will not second guess the output that the AI is providing you at that moment. If it has given you images or text that aren’t truthful or incorrect, who is to know? This is damaging for your learning process, especially if the user is a child who would then have an even more damaged foundation. This attack, in which explicitly erroneous images could be chosen for specific prompts is highlighted in the paper “Poison Frogs! Targeted Clean-Label Poisoning Attacks on Neural Networks”. Researchers used “poisonous images”, images that appeared close enough to a base set of data but obviously was not correct under a specific label, to corrupt the training set of a model. In effect, the model is prone to generating unknowingly falsified output. Protecting against this type of attack involves validating the data before it is to be used in training, using signatures to measure its integrity. Even still, there are attacks within the realm of data poisoning that can occur if the data has been checked for authenticity, a certain flavor known as a backdoor.

AI will continue to push itself into more domains, automating processes or augmenting humans to do more things. One of the most obvious advantages to incorporating AI into your workflow is freeing your mindshare. Low impact, monotonous tasks that compete for your attention drains focus and energy. Offloading AI to make certain decisions clearly solves for this. However, we’re still at a point where humans must oversee the output AI is creating. In software development, this is reviewing the code that AI creates for correctness, idiomatic choices, and readability. In the paper “Planting Undetectable Backdoors in Machine Learning Models”, researchers create a fictional scenario where AI is responsible for making the decision of whether someone is able to secure a loan or not. By corrupting the classifier through the use of a backdoor, adversaries are able to alter decisions based on arbitrary pieces of information. For example, given a set of characteristics about an individual like the loan they’re requesting, their income, their current debt, their name, the backdoor would be able to give a loan to one individual whose name is “Sarah” and deny a loan to another individual with the name “Robert”. All characteristics being equal except for their name, this attack would easily slip through the cracks of a service measuring the integrity of a classifier. Defenses against backdooring live within research papers and as alluded to in the article, a backdoor can presumably be undetectable if constructed a certain way. It has been found that through the use of “Neuron Pruning”, a technique that removes neurons or connections within a neural network, defenders can adequately protect against backdoors. More research and analysis is warranted to understand both the attack and defense of this technique. Time is the most nefarious constraint, isn’t it? As developers seek to push work faster, security is an afterthought. In order to move faster, using the work of another is often taken as a shortcut. However, as previously mentioned, the data that is used might be corrupted and the consequences of not validating the integrity of data are serious.

Training models require a significant amount of data and compute, both expensive and time-consuming to obtain. For data, it’s not uncommon for model developers to use datasets previously curated as it’s likely they are both large enough and clean enough to yield workable results. One such corpus might be Wikipedia, a huge database of text that is constantly being updated and can readily be downloaded and used. The ease with which updates can happen to Wikipedia articles presents the double-edged sword in this scenario. The most current information can appear in the article, but is the information correct? Nicholas Carlini exposes this gap in a discussion on data poisoning and asserts that it’s possible to poison >5% of Wikipedia. By editing certain pages moments before snapshots are taken, adversaries can inject whatever they would like into the articles. As a result, those poisoned articles will be embalmed into the snapshot, poisoning whichever developer uses it. Prevention of this type of attack involves validating the cryptographic hash of the dataset that a developer is working with. In short, your default method of operation should be that you do not trust the dataset until you’ve validated that it matches your expectations.

In summation, data poisoning is a serious threat to the supply chain of generative AI solutions. The major ramification of not validating the integrity of data sources and building robust and secure data pipelines is a loss of trust in the application’s users. Incorrect output can result in a user abandoning a service or making a decision that can negatively impact them. While there are proposed solutions and continued research against data poisoning, further research in production environments is still necessary to identify solutions that AI engineers can use to ensure their models are free from harm. Even still, traditional defenses like a hardened pipeline and least privilege access to data still apply. Don’t abandon effort spent to ensure repositories have the right branch protections and that pipelines are running securely. The good news is that we don’t need radically new antidotes, data poisoning is merely another layer that we must pay attention to and can combat with the techniques practitioners have been using for quite some time.

TL;DR: We disclosed to Chainguard in December 2023 that one of their GitHub Actions workflow was...